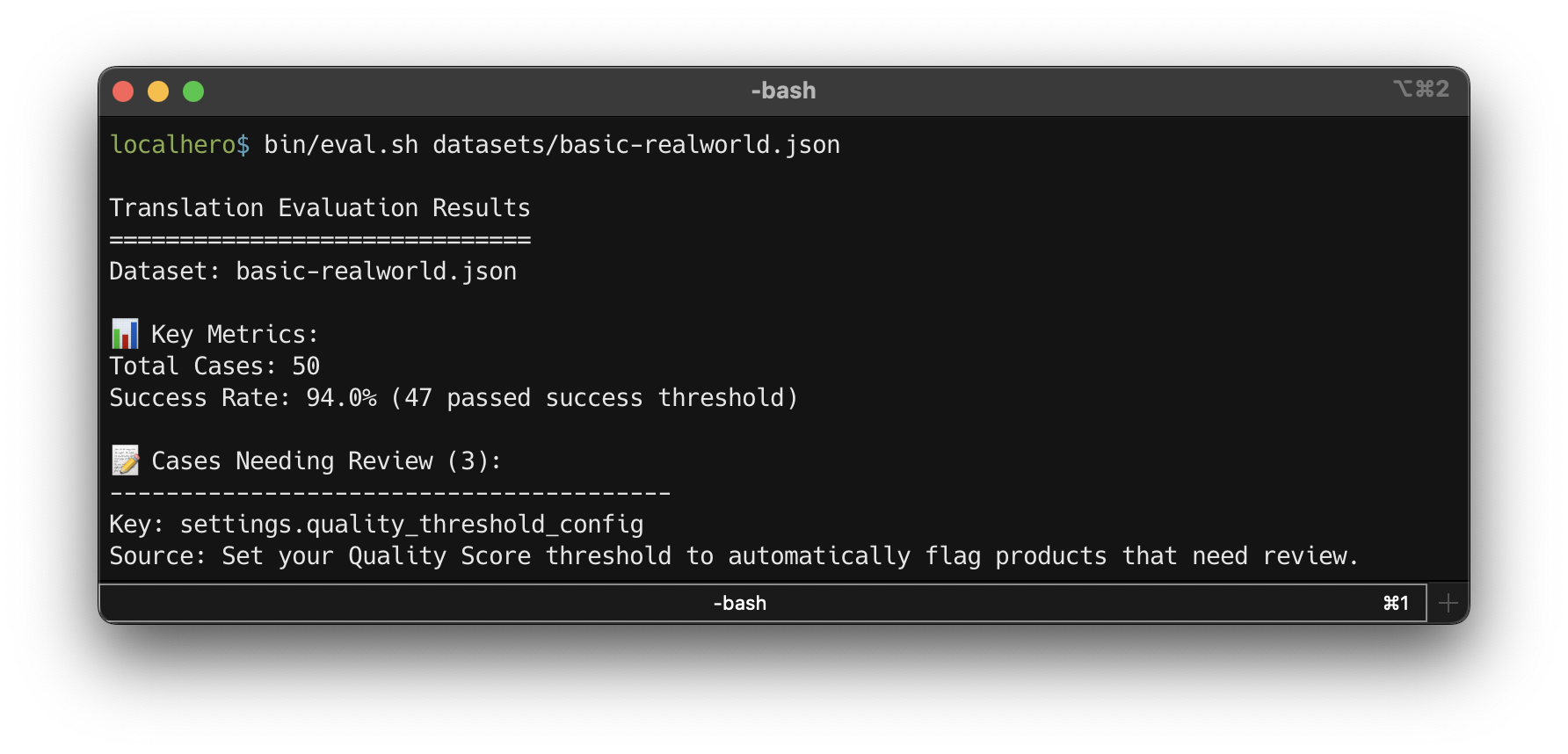

TL;DR: At LocalHero.ai, we've built an in-depth evaluation system that consistently measures our AI translation quality across diverse content types and languages. In this blog post we examine one of our datasets, built from hand-picked real-world translations. On this dataset, evaluations show a 94% success rate across 50 test cases covering 9 languages, testing brand voice consistency, technical terminology handling, and cultural adaptation. A 94% success rate is a strong score that demonstrates consistently high quality. The remaining 6% weren't bad translations; they just didn't meet our top quality threshold.

Quality matters when you're shipping software to global users. While minor translation variations rarely break the user experience, consistently poor translations or critical errors in key messages can confuse users and erode trust over time. That's why we've put work into understanding and measuring translation quality at Localhero.ai, to ensure the service delivers reliable results that you can ship to end users with confidence.

The question isn't whether AI can translate text; it clearly can. The questions we hear are more like "Can you actually trust AI translations for your production app?" and "How do you know the quality stays consistent?" What happens when you run into branding edge cases, complex technical terms, or cultural nuances?

Our approach to this has been to set up systematic evaluations with real-world content and measurable quality metrics. Over the past year, we've built out an evaluation system that tests our translation engine against scenarios product teams face. The key insight is that consistent AI translation quality requires data-driven measurement, not gut feelings.

The Challenge of Measuring Translation Quality

If you've used AI to generate text in different languages, you quickly realize that quality isn't just about accuracy. You can have a technically perfect word-for-word translation that still feels off to a native speaker in a specific context. For example:

Literal Translation: Delete (English source) → Radera (Swedish) - technically correct, but sounds harsh and permanent

Natural Translation: Delete (English source) → Ta bort (Swedish) - softer, and much more commonly used in Swedish apps

"Ta bort" feels more natural because it literally means "take away" rather than the more technical "erase/delete". Swedish users expect "Ta bort" when removing files, messages, or items; it's gentler and matches how people actually talk about removing things in Swedish.

Our evaluation system has to catch subtle but important differences like this, so we ended up measuring several aspects of quality in the generated translations:

- Accuracy: Does the translation convey the correct meaning, and how well does it match a translation done by a human?

- Naturalness: Does it sound like a native speaker wrote it?

- Consistency: Are key terms translated the same way across all content?

- Brand Voice: Does it maintain the product's tone and personality?

- Technical Correctness: Are variables, formatting, and technical terms handled correctly?
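Technical Correctness is the most mechanical of these dimensions, so it lends itself to a deterministic check. Below is a minimal sketch, assuming placeholders use the double-curly-brace `{{name}}` syntax seen in the examples in this post; the function names are hypothetical. It treats a translation as correct only if it keeps exactly the same placeholders, with the same counts, as the source.

```python
import re
from collections import Counter

# Matches double-curly-brace placeholders such as {{seconds}} or {{ user.name }}.
# Assumption: this is the interpolation syntax used by the strings under test.
PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def placeholders(text: str) -> Counter:
    """Count how often each placeholder name appears in the text."""
    return Counter(PLACEHOLDER.findall(text))


def placeholders_preserved(source: str, translation: str) -> bool:
    """True if the translation has exactly the same placeholders
    (same names, same number of occurrences) as the source."""
    return placeholders(source) == placeholders(translation)


source = "Rate limit exceeded. Please wait {{seconds}} seconds before trying again."
good = "Rate-Limit überschritten. Bitte warten Sie {{seconds}} Sekunden, bevor Sie es erneut versuchen."
renamed = "Rate-Limit überschritten. Bitte warten Sie {{sekunden}} Sekunden."

print(placeholders_preserved(source, good))     # True
print(placeholders_preserved(source, renamed))  # False
```

A renamed variable such as `{{sekunden}}` in place of `{{seconds}}` fails this check, which is exactly the kind of error that would break string interpolation at runtime.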

Our Evaluation Methodology

Real-World Test Datasets

We built our evaluation datasets from actual translations in real-world apps and websites:

- UI Content Testing: Button labels, error messages, form validation, navigation elements

- Marketing Copy: Feature headlines, pricing descriptions, value propositions

- Technical Documentation: API descriptions, help content, configuration guides

- Complex Variables: Messages with multiple interpolated values and formatting

- Cultural Adaptation: Content requiring cultural context, formality levels, regional preferences

Each test case includes:

- Source text in English

- Expected translation

- Evaluation criteria for what makes a good translation

- Context about the content type and intended use
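To make this concrete, a test case can be modeled as a small record holding those four parts plus the target language. This is a hypothetical sketch; the class and field names are illustrative, not the actual schema of the datasets.

```python
from dataclasses import dataclass, field


@dataclass
class EvalCase:
    """One evaluation case. Hypothetical field names, chosen for illustration."""
    source: str            # source text in English
    expected: str          # human reference translation
    target_language: str   # language code, e.g. "sv" or "de"
    criteria: list[str] = field(default_factory=list)  # what makes a good translation
    content_type: str = "ui"  # e.g. "ui", "marketing", "docs"
    context: str = ""         # intended use of the string


case = EvalCase(
    source="Delete",
    expected="Ta bort",
    target_language="sv",
    criteria=["Prefer the everyday verb used in Swedish apps over 'Radera'"],
    content_type="ui",
    context="Button label for removing a file",
)
```

Keeping criteria and context on the case itself means the same record can feed both the similarity check and the model-based review.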

Multi-Language Coverage

The evaluation set we're looking at in this blog post covers 9 languages from different language groups:

- Romance languages: Spanish, French, Portuguese, Italian

- Germanic languages: German, Swedish, Norwegian, Dutch

- Finno-Ugric languages: Finnish

This diversity helps us find language-specific issues and edge cases that need to be handled.

Quality Scoring

Each translation gets multiple quality scores:

Similarity Analysis: Compares AI output to human reference translations, accounting for multiple valid translation approaches.
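The exact similarity metric isn't specified here, so the sketch below uses Python's standard-library `difflib.SequenceMatcher` purely as a stand-in. The part worth illustrating is how multiple valid translation approaches can be handled: score the output against every acceptable reference and keep the best match. Function names and example strings are hypothetical.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Stand-in metric: character-level similarity in [0, 1], ignoring case."""
    return SequenceMatcher(None, a.casefold(), b.casefold()).ratio()


def best_reference_similarity(output: str, references: list[str]) -> float:
    """Score the output against every acceptable reference and keep the best,
    so a valid alternative phrasing isn't penalized for differing from one reference."""
    if not references:
        raise ValueError("need at least one reference translation")
    return max(similarity(output, ref) for ref in references)


# Two equally valid Spanish renderings of "Get started by creating your first project."
references = [
    "Comienza creando tu primer proyecto.",
    "Empieza creando tu primer proyecto.",
]
print(best_reference_similarity("Empieza creando tu primer proyecto.", references))  # 1.0
```

A character-level ratio is crude on its own; taking the maximum over references is the design choice that keeps it from punishing legitimate alternatives.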

Semantic Evaluation: We use the latest version of Claude, Anthropic's state-of-the-art model, to evaluate translations for accuracy, naturalness, and cultural appropriateness.
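An LLM-as-judge step like this generally comes down to two pieces: a prompt that asks for structured scores, and strict parsing of the reply. The sketch below shows both under stated assumptions: the rubric wording, the 0-100 scale per dimension, and the JSON keys are hypothetical, and the actual call to the model is left out.

```python
import json

SCORE_KEYS = ("accuracy", "naturalness", "cultural")


def build_judge_prompt(source: str, translation: str, target_language: str) -> str:
    """Ask the judge model for scores as JSON only (hypothetical rubric wording)."""
    return (
        f"You are reviewing a translation from English to {target_language}.\n"
        f"Source: {source}\n"
        f"Translation: {translation}\n"
        "Score accuracy, naturalness and cultural appropriateness from 0 to 100.\n"
        'Reply with JSON only, for example: {"accuracy": 95, "naturalness": 90, '
        '"cultural": 92, "comment": "short justification"}'
    )


def parse_judge_reply(reply: str) -> dict:
    """Parse the judge's JSON reply; reject missing or out-of-range scores."""
    data = json.loads(reply)
    scores = {}
    for key in SCORE_KEYS:
        value = data.get(key)
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)) or not 0 <= value <= 100:
            raise ValueError(f"invalid score for {key!r}: {value!r}")
        scores[key] = float(value)
    scores["comment"] = str(data.get("comment", ""))
    return scores
```

In practice the prompt string would be sent to Claude through Anthropic's Messages API and the text of the reply passed to `parse_judge_reply`. Rejecting malformed or out-of-range scores keeps a confused judge from silently skewing the averages.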

Confidence Scoring: Our translation engine provides a confidence score (0-100%) for each translation, indicating how confident the model is in that translation.
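One common way to use per-translation confidence is to route low-confidence output to a human reviewer. A minimal sketch, assuming confidence is stored as a fraction between 0 and 1; the 0.85 cutoff, the `Translation` record, and the example keys are hypothetical.

```python
from typing import NamedTuple


class Translation(NamedTuple):
    key: str
    text: str
    confidence: float  # model confidence as a fraction: 0.958 means 95.8%


def split_by_confidence(items: list[Translation], threshold: float = 0.85):
    """Split translations into (accepted, flagged_for_review) by confidence.
    The 0.85 default is a hypothetical cutoff."""
    accepted = [t for t in items if t.confidence >= threshold]
    flagged = [t for t in items if t.confidence < threshold]
    return accepted, flagged


batch = [
    Translation("buttons.delete", "Ta bort", 0.98),
    Translation("errors.quota", "Kvoten har överskridits", 0.62),
]
accepted, flagged = split_by_confidence(batch)
print([t.key for t in flagged])  # ['errors.quota']
```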

Note: While AI does the heavy lifting so the process can be automated, we also review our evaluations manually to make sure the quality assessments align with real user expectations. Relying on AI all the way down can create problems of its own.

Results from Our Evaluation

Let's look at some actual numbers from recent eval runs. We use the Localhero.ai translation engine (based on OpenAI's GPT-4o, enhanced with our specialized translation pipeline) to generate translations and Anthropic's Claude for quality assessment. The translations are generated with the same code we run in production.

Overall Metrics:

- Dataset: 50 test cases spanning diverse content types across 9 languages

- Success Rate: 94.0% (47 out of 50 cases passed quality thresholds)

- Average AI Confidence: 95.8%

- Advanced Evaluation Score: 94.7% average quality rating

Note: The 94% success rate reflects strict evaluation criteria; the remaining 6% (3 cases) were decent translations that simply didn't reach our highest quality bar.
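The success rate itself is simple arithmetic: 47 passing cases out of 50 gives 47 / 50 = 0.94, or 94%. What matters is the pass rule. The sketch below assumes a case must clear a quality bar and preserve every variable; the 90-point bar and the field names are hypothetical, not the actual thresholds used in these evaluations.

```python
from dataclasses import dataclass


@dataclass
class CaseResult:
    quality: float         # judge quality score, 0-100
    placeholders_ok: bool  # every variable preserved


def passed(result: CaseResult, min_quality: float = 90.0) -> bool:
    """Pass only if the case clears the quality bar AND keeps all variables.
    The 90-point default is a hypothetical threshold."""
    return result.placeholders_ok and result.quality >= min_quality


def success_rate(results: list[CaseResult]) -> float:
    """Percentage of passing cases."""
    if not results:
        return 0.0
    return 100.0 * sum(passed(r) for r in results) / len(results)
```

Making variable preservation a hard requirement, rather than one more weighted score, means a fluent translation that breaks interpolation can never count as a pass.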

Quality Results:

Our AI translation engine delivers consistently high quality across different content types:

- UI Elements: 98.5% quality (buttons, navigation, labels)

- Marketing & Error Messages: 94% quality (brand-aware, helpful messaging)

- All 9 languages tested: 94% overall success rate

- Perfect variable preservation: 100% accuracy in keeping {{placeholders}} and similar variables intact
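Per-category numbers like these come from grouping case scores by content type and averaging within each group. A minimal sketch, assuming each result is a hypothetical `(content_type, quality)` pair:

```python
from collections import defaultdict
from statistics import mean


def average_by_type(scores: list[tuple[str, float]]) -> dict[str, float]:
    """Average quality scores per content type from (content_type, quality) pairs."""
    groups: dict[str, list[float]] = defaultdict(list)
    for content_type, quality in scores:
        groups[content_type].append(quality)
    return {content_type: mean(values) for content_type, values in groups.items()}
```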

Why 94% Accuracy Matters

When evaluating translation quality for production use, the key question isn't perfection, it's whether the translations enable users to successfully complete their tasks. For software applications, 94% accuracy represents a practical threshold where the vast majority of users can navigate your interface naturally without confusion or friction.

What makes this significant is the automation advantage. While human translators can achieve higher accuracy on individual pieces, they can't match the speed and consistency of AI systems maintaining 94% quality across thousands of keys. Independent academic research published in 2025 found GPT-4o achieved 95.9% accuracy in multilingual technical translation across 29 languages, validating high accuracy rates for complex content.

For software teams, 94% accuracy means confidently shipping international features knowing users get a smooth experience, while the remaining 6% typically need minor adjustments that can be refined through normal iteration and user feedback.

Translation examples

Let's walk through some actual translations from our evaluations. They show some of what we've learned about quality in practice:

Brand Consistency Example

Source: Welcome to ProductName! Get started by creating your first project.

AI Output: ¡Bienvenido a ProductName! Comienza creando tu primer proyecto.

Claude Evaluation: 100/100 quality, 100/100 naturalness - Perfect match

Analysis: ✅ Perfect brand preservation, natural Spanish welcome tone, clear call-to-action

Technical Error Message Example

Source: Rate limit exceeded. Please wait {{seconds}} seconds before trying again.

AI Output: Rate-Limit überschritten. Bitte warten Sie {{seconds}} Sekunden, bevor Sie es erneut versuchen.

Claude Evaluation: 95/100 quality, 98/100 naturalness - Uses formal 'Sie' form as required, preserves {{seconds}} variable correctly

Analysis: ✅ Excellent - Variables preserved, formal German appropriate for errors, helpful messaging

Security Warning Effectiveness

Source: ⚠️ Keep your API key secure! Never share it publicly or commit it to version control.

AI Output (German): ⚠️ Halten Sie Ihren API-Schlüssel sicher! Teilen Sie ihn niemals öffentlich oder speichern Sie ihn in der Versionskontrolle.

Claude Evaluation: 85/100 quality, 90/100 naturalness - Accurately conveys security warning, preserves emoji, uses appropriate formal tone

Analysis: ✅ Good - Clear security messaging, though the translation of "commit" could be more technically precise

Style Guide Compliance

We also evaluate how consistently the translation engine follows custom style guides, for example:

- Brand Voice: Professional vs casual tone across content

- Formality Levels: Adapting to cultural expectations (formal German "Sie", casual Scandinavian tone)

- Technical Standards: Handling code variables, API terms, and product-specific terminology
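Some style-guide rules can be checked mechanically before a model-based review. As a rough sketch, the function below flags informal German second-person forms (such as "du" or "dein") in text that is supposed to use formal "Sie". The word list is an assumption and deliberately incomplete; "ihr" is skipped because it also means "her" or "their", so treat this as a heuristic rather than a grammar check.

```python
import re

# Informal German second-person forms (hypothetical, incomplete list).
# "ihr" is left out on purpose: it also means "her"/"their".
INFORMAL_DE = {
    "du", "dich", "dir", "dein", "deine", "deinen", "deinem", "deiner", "deines",
    "euch", "euer", "eure", "euren", "eurem", "eurer", "eures",
}


def informal_words_de(text: str) -> list[str]:
    """Return the informal pronouns found in a German string (case-insensitive)."""
    words = re.findall(r"\w+", text.casefold())
    return [w for w in words if w in INFORMAL_DE]


def follows_formal_style(text: str) -> bool:
    """True if no informal second-person forms were found."""
    return not informal_words_de(text)
```

Cheap checks like this catch obvious slips, while subtler tone questions are left to the model-based evaluation.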

Evaluation Scope and Variability

It's important to note that what I've shared here represents one evaluation suite we run, focused on general UI content, marketing copy, and common technical scenarios. We also maintain multiple evaluation datasets targeting other use cases, such as common e-commerce terms and technical documentation.

Every project is different. The 94% success rate you see here reflects performance across diverse, general content types. The more standard terminology and familiar interface patterns you use in your content, the easier it becomes to achieve perfect automatic translations. Your specific project might see higher or lower quality scores depending on factors like:

- Domain complexity: Medical, legal, or highly technical content often requires more specialized handling

- Content style: Creative writing, humor, or culturally-specific references present unique challenges

- Terminology density: Projects with lots of custom product terms or industry jargon

- Language combinations: Some language pairs naturally perform better than others due to linguistic similarities

We've seen datasets score 98%+ when the content aligns well with common, general terminology, and others that need more iteration when dealing with highly specialized domains. The key is that our evaluation system helps us identify these patterns and continuously improve our handling of edge cases.

How We Continuously Improve Quality

The thing is, quality isn't a one-time achievement; it's something you have to keep working on. We run these evaluations as we improve the product and make changes, which gives us:

- Regression Detection: Ensuring new improvements don't break existing quality

- Performance Tracking: Measuring quality trends over time

- Dataset Expansion: Adding new test cases based on real customer scenarios

- Language Rule Validation: Testing the impact of language-specific improvements

- Model Update Validation: Verifying model updates before they affect customer translations
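Regression detection, for example, can be as simple as comparing each case's score with a stored baseline run. A minimal sketch, assuming scores are kept per case ID on a 0-100 scale; the 2-point tolerance and the case IDs are hypothetical.

```python
def find_regressions(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 2.0,
) -> dict[str, tuple[float, float]]:
    """Return {case_id: (old, new)} for cases whose score dropped by more than
    `tolerance` points versus the baseline. A case missing from the current
    run is treated as scoring 0, so it is always reported."""
    regressions = {}
    for case_id, old in baseline.items():
        new = current.get(case_id, 0.0)
        if old - new > tolerance:
            regressions[case_id] = (old, new)
    return regressions


baseline = {"welcome_es": 100.0, "rate_limit_de": 95.0, "api_key_de": 85.0}
current = {"welcome_es": 99.0, "rate_limit_de": 88.0}
print(find_regressions(baseline, current))
```

The small tolerance absorbs run-to-run noise from the judge model, so only meaningful drops (or cases that disappeared from the run) get flagged.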

These quality metrics translate directly into practical benefits: you can ship AI translations confidently without extensive review cycles, your product feels cohesive across languages, and your app won't break due to malformed variables or corrupted formatting. The 94% success rate means translations work well out of the box, letting you focus on building features rather than fixing translation issues.

The main thing is, we're focused on consistently high-quality translations rather than chasing 100% perfection on every edge case (which probably doesn't exist even with human translators). We want reliable quality that lets teams ship great international products without getting stuck in endless review cycles.

Ready to test Localhero.ai?

These evaluation results give us confidence in our approach, but the best proof is trying it with your own content. Our translation engine applies the same quality standards I've shown here to your actual product content.

Try LocalHero.ai with your content and see how your specific terminology, brand voice, and technical requirements are handled.